Yes, the irony of using a bot to build bot protection is not lost on me. But the experience taught me something. Development hasn't gotten easier with AI. It's gotten more intense.

The Postmark Incident

Zero Waste Tickets is a side project of mine. Real users, real traffic, nothing massive. The login flow is passwordless. You enter your email address and the app sends you a code. No passwords to manage, no credentials to store. Simple.

Too simple, it turns out, if you don't protect the form.

Last September, Postmark paused sending on my account. Polite email, no drama, but the message was clear: they'd spotted anomalous sending patterns and flagged it as potential abuse.

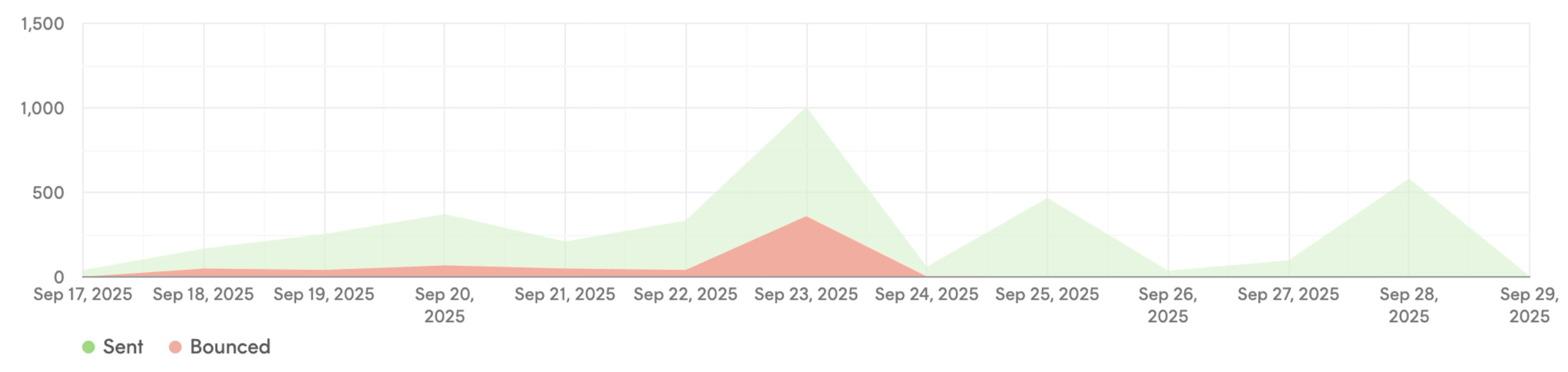

You can see in the graph below that the site doesn't have many users. It's a small side project in closed beta, so it's only really used by friends and friends of friends. But you can also see that email bounces had been slowly increasing, then surged massively on 23rd September:

The investigation didn't take long. Bots had been hammering the login form. Every submission triggered an email with a login code. Postmark's message suggested that my API token might have been compromised. It hadn't. My basic bot protection had simply failed.

When I first built the site several years ago, I had no protection. But I noticed some fake login attempts in the logs, so I implemented a basic honeypot field: a field that's invisible to regular users but that bots fill in. If the field came back with a value, I rejected the submission. It had been working fine for years. Then the error rate started to climb slowly, the honeypot stopped catching the bots, and the volume was enough to trip Postmark's detection.
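For anyone who hasn't seen one, the server side of a honeypot check is tiny. This is a minimal sketch rather than the code from the site: the field name `website` and the function `isLikelyBot` are made up for illustration, and it assumes the form has already been parsed into a plain object.

```javascript
// Hypothetical field name; the real form's hidden field may be called anything.
// In the HTML it would be an ordinary input hidden from humans with CSS.
const HONEYPOT_FIELD = "website";

// Returns true when the hidden field came back with a non-empty value,
// which a human using the visible form would never produce.
function isLikelyBot(formData) {
  const value = formData[HONEYPOT_FIELD];
  return typeof value === "string" && value.trim() !== "";
}

// Example submissions (made-up data).
const human = { email: "friend@example.com", website: "" };
const bot = { email: "spam@example.com", website: "http://spam.example" };

console.log(isLikelyBot(human)); // false
console.log(isLikelyBot(bot));   // true
```

The weakness is visible in the sketch itself: a bot that leaves unknown or hidden fields empty passes straight through.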

The Weekend Fix

I put Cloudflare in front of the site as an emergency response, which bought some time. But Cloudflare was having its own reliability issues around then, and I'd rather not make my users' access to a side project contingent on a third party. I like to keep dependencies minimal, and this is a project I use for learning and experimenting. I wanted to understand the problem, not outsource it.

What I didn't want was a captcha. Annoying UX, terrible privacy. I don't want my users identifying motorbikes and fire hydrants to log in.

I hate proof-of-work in principle, because of the wasted effort. It goes against the Zero Waste Tickets ethos. But I needed something that would not get in the users' way but would trip up attackers or at least slow them down to the point where it's not worth it. I'm just adding it to the login form, as the rest of the site is protected by the login session. So I figured the waste was minimal for just that one form if it worked to stop the spammer.

I built it by hand over a weekend. Before the server accepts a form submission, the browser has to solve a small computational puzzle. A hash challenge running in a Web Worker so it wouldn't block the UI. The server generates a challenge, the client computes the answer, the server verifies it before processing the form. Nothing fancy. Rust on the backend, a bit of JavaScript on the front.
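To make the generate, solve, verify loop concrete, here is a rough sketch of a hashcash-style challenge. It is not the code from Zero Waste Tickets (the real server is Rust): the function names, the `nonce:counter` input format, the choice of SHA-256, and the 12-bit default difficulty are all assumptions for illustration. It uses Node's built-in `crypto` module so it runs as a single file; in the browser, the solving loop would sit in the Web Worker and use the Web Crypto API instead.

```javascript
const crypto = require("node:crypto");

// Server side: issue a random challenge plus a difficulty,
// expressed as the number of leading zero bits the hash must have.
function createChallenge(difficultyBits = 12) {
  return { nonce: crypto.randomBytes(16).toString("hex"), difficultyBits };
}

// Count the leading zero bits of a hash digest (a Buffer).
function leadingZeroBits(digest) {
  let bits = 0;
  for (const byte of digest) {
    if (byte === 0) {
      bits += 8;
      continue;
    }
    bits += Math.clz32(byte) - 24; // clz32 works on 32 bits; a byte uses the low 8
    break;
  }
  return bits;
}

// Hash one attempt. The "nonce:counter" format is an arbitrary choice for this sketch.
function hashAttempt(nonce, counter) {
  return crypto.createHash("sha256").update(`${nonce}:${counter}`).digest();
}

// Client side: brute-force a counter until the hash is "small enough".
function solve(challenge) {
  let counter = 0;
  while (leadingZeroBits(hashAttempt(challenge.nonce, counter)) < challenge.difficultyBits) {
    counter += 1;
  }
  return counter;
}

// Server side: checking an answer costs a single hash.
function verify(challenge, counter) {
  return (
    Number.isSafeInteger(counter) &&
    counter >= 0 &&
    leadingZeroBits(hashAttempt(challenge.nonce, counter)) >= challenge.difficultyBits
  );
}

const challenge = createChallenge();
const answer = solve(challenge);
console.log(verify(challenge, answer)); // true
```

The asymmetry is the whole point: the client needs about 2^difficultyBits hashes on average, while the server needs one. A real deployment would also need to expire challenges and reject reused ones, which this sketch leaves out.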

It worked. The spam dropped off. Postmark was happy. I moved on.

That could have been the end of the story.

The Descent

A few months later I came back to the problem. Not because the proof of work stopped working. It's still working fine. I came back because I'm helping my wife get her site off Squarespace and she needs a contact form. That means bot protection. So what if I extracted the bot protection from ZWT and put it into its own reusable service?

That's where things escalated.

Before AI, a "weekend project" for me was: implement a proof-of-work challenge on a login form. Research the approach, write the hash function, wire up the Web Worker, build the server verification, test it, ship it. A focused, self-contained piece of work.

After AI, a "weekend project" is: multiple challenge algorithms, a broker that selects the right one based on risk signals, dynamic difficulty scaling, behavioural analysis. You're halfway to accidentally reinventing Cloudflare.

Overengineering used to be self-limiting because building things took time. You'd think "what if I added dynamic difficulty scaling?" and then you'd put it on the ever-growing list of things to maybe get to later. That brake is gone. With Claude Code, every one of those ideas is achievable in the time it used to take to build just one.

And "weekend" is generous too. It's really a few hours here and there, squeezed in when I find time.

The answer isn't to resist every impulse to overengineer. Some of that expanded scope is genuinely good. The challenge broker is real architecture that solves a real problem. Dynamic difficulty is good protection.
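As one illustration of what dynamic difficulty could look like, here is a sketch that maps a single risk signal to a proof-of-work difficulty. Everything in it is hypothetical: the constants, the function name `difficultyFor`, and the choice of "recent attempts from the same source" as the signal are assumptions, not a description of the actual service.

```javascript
// Hypothetical tuning values, chosen only for illustration.
const BASE_BITS = 12;    // difficulty for ordinary traffic
const MAX_BITS = 22;     // cap so a false positive never locks a real user out
const FREE_ATTEMPTS = 5; // attempts allowed before the difficulty starts rising

// Each doubling of recent attempts beyond the free allowance adds one bit,
// which doubles the expected work for the client.
function difficultyFor(recentAttempts) {
  let bits = BASE_BITS;
  let threshold = FREE_ATTEMPTS;
  while (recentAttempts > threshold && bits < MAX_BITS) {
    bits += 1;
    threshold *= 2;
  }
  return bits;
}

console.log(difficultyFor(1));  // 12
console.log(difficultyFor(11)); // 14
console.log(difficultyFor(40)); // 15
```

A regular user never leaves the base difficulty, while a bot's cost roughly doubles each time it doubles its volume.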

Being Honest

Zero Waste Tickets doesn't get enough traffic to justify any of this. The original proof of work solved the problem.

The Postmark incident was real. The learning was real. The increased potential is real. But so is the cognitive load. Every "what if" that the AI makes achievable is another thing to evaluate, review, and maintain. The temptation to overengineer isn't free. It takes mental energy to resist it, and more energy when you don't.

A recent HBR article by Ranganathan and Ye, "AI Doesn't Reduce Work—It Intensifies It", found exactly this. They studied 200 employees at a tech company over eight months. Nobody was asked to do more. But with AI tools available, they voluntarily expanded their own workloads. The researchers described "a sense of always juggling, even as the work felt productive." That's the feeling.

I had a realisation recently while in a supermarket. There was one person on the old-style tills, scanning items, chatting to people, making the experience human. And there was one person on the self-scan checkouts dealing with twelve tills at once, running from one to the next, helping frustrated customers whose machines weren't working, in constant demand. That's what coding with AI agents is like. You're not doing less. You're supervising more, across more fronts, with less downtime between decisions. Except nobody made you move to the self-scan area. You walked over there yourself, because the machines looked faster.

Development hasn't really gotten any easier with AI. It's gotten more intense.